In my last blog post, I described the difference between loosely coupled and tightly coupled workloads, with loosely coupled workloads being ideal for Azure. Now that we know the difference between the workloads, I’ll dive into the basics of HPC architecture in Azure. A typical HPC setup has a front-end for submitting jobs, a scheduler/workload manager/orchestrator, a compute cluster, and shared storage.

Cloud-Only Deployments

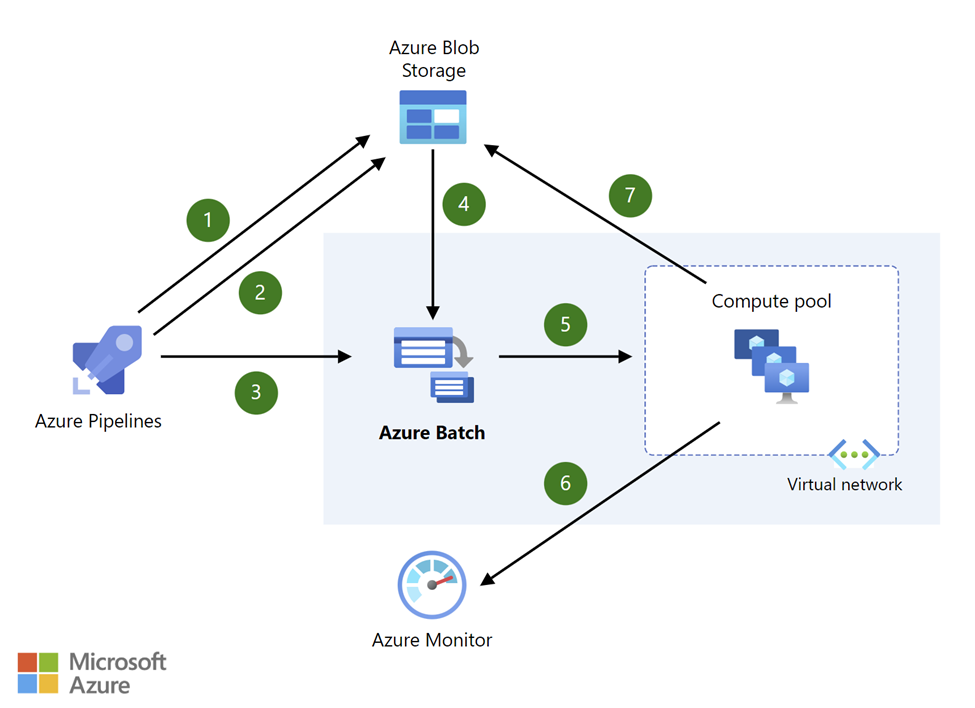

While cloud-only deployments are relatively rare, they make a good starting point because they are less complex than the more common hybrid deployments. In a cloud-only deployment, you would typically have a front-end system responsible for scheduling and submitting jobs. The diagram above shows an example of a simple cloud-only HPC cluster deployment.

The workflow is relatively simple. Azure Pipelines starts a pipeline that compiles the code and stores the executable in Azure Storage. The same pipeline job then loads the input data into the storage account. After that, the pipeline asks Azure Batch to start processing the job, which completes the pipeline. Azure Batch then copies the executables and input data and begins assigning tasks to the pool of compute nodes. The Batch service keeps retrying and reassigning tasks until the nodes complete their work. When the compute nodes finish their tasks, they write the output data to Azure Storage for review. Throughout the run, you can use Azure Monitor to collect performance data and use it to tune future jobs. It is worth noting that this architecture is ideal for loosely coupled workloads. However, Azure Batch also supports tightly coupled workloads using Microsoft MPI (MS-MPI) or Intel MPI.
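To make the retry-and-reassign step more concrete, here is a minimal Python sketch of how a scheduler might hand loosely coupled tasks to a pool of nodes and requeue the ones that fail. This is an illustration only and is not the Azure Batch API or its actual scheduling algorithm: the names `Task`, `run_job`, `run_on_node`, and `max_attempts` are all hypothetical, the round-robin node choice is an assumption, and the "nodes" are plain strings.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    """One independent unit of work (hypothetical, for illustration)."""
    task_id: int
    attempts: int = 0


def run_job(task_ids, node_names, run_on_node, max_attempts=3):
    """Assign tasks to nodes round-robin and requeue tasks that fail.

    run_on_node(node, task_id) is a caller-supplied function that returns
    True on success and False on failure. A task is dropped into `failed`
    once it has been tried max_attempts times.
    """
    queue = deque(Task(t) for t in task_ids)
    completed = {}  # task_id -> node that finished it
    failed = []     # task_ids that exhausted their attempts
    turn = 0
    while queue:
        task = queue.popleft()
        node = node_names[turn % len(node_names)]
        turn += 1
        task.attempts += 1
        if run_on_node(node, task.task_id):
            completed[task.task_id] = node
        elif task.attempts < max_attempts:
            queue.append(task)  # retry later, possibly on another node
        else:
            failed.append(task.task_id)
    return completed, failed


if __name__ == "__main__":
    seen = set()

    def flaky(node, task_id):
        # Simulated node: task 2 fails on its first attempt only.
        if task_id == 2 and task_id not in seen:
            seen.add(task_id)
            return False
        return True

    done, lost = run_job([1, 2, 3], ["node-a", "node-b"], flaky)
    print("completed:", done)
    print("failed:", lost)
```

Because each task is independent, a failed task can simply go back on the queue without affecting the others, which is why this pattern fits loosely coupled workloads so well.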

Hybrid Deployments

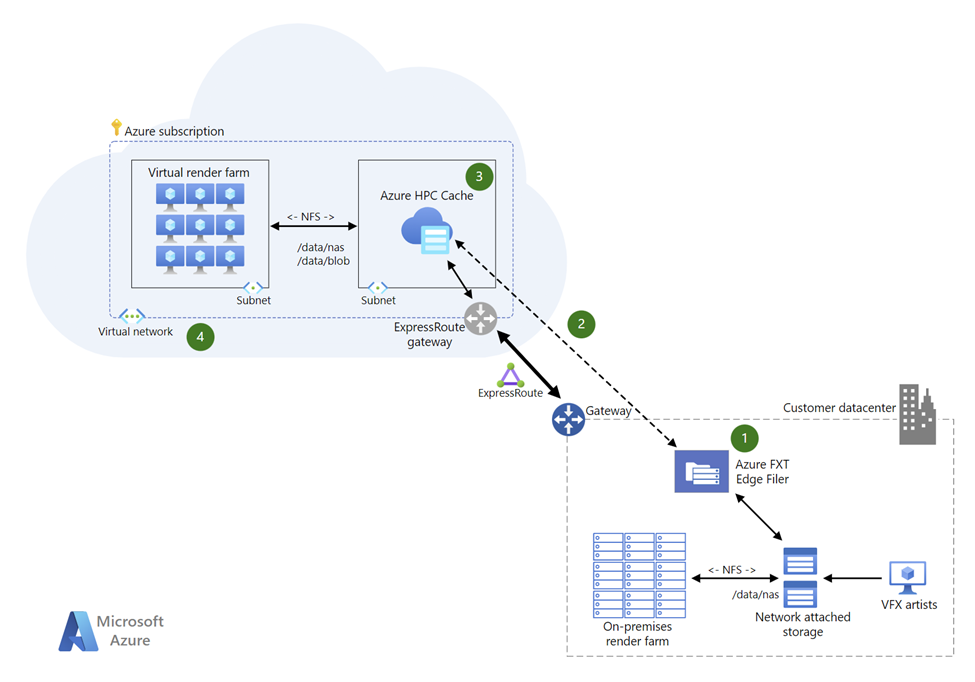

Hybrid deployments introduce a higher level of complexity and require careful consideration of networking, security, and data access. The first step is to establish a fast and secure connection between your on-premises infrastructure and Azure. To achieve this, I recommend deploying Azure ExpressRoute or Azure VPN Gateway. Either option lets you extend your on-premises network into Azure over a secure connection. If possible, I strongly suggest using Azure ExpressRoute for your hybrid infrastructure, as its private connection generally offers better performance and reliability than a VPN over the public internet. The diagram above shows an example of a hybrid HPC deployment in Azure for media rendering.

To optimize data flow and improve access to NAS files, you can use Azure FXT Edge Filer, which gives artists low-latency access to storage. Next, Azure ExpressRoute connects your on-premises resources to Azure so that you can use additional cores. Azure HPC Cache gives your compute nodes low-latency access to data for burst rendering, and it can be managed through the Azure SDK for easier infrastructure management and automation. To accommodate fluctuating demand and burst into Azure seamlessly, Azure Virtual Machine Scale Sets work well as your render farm, since they can scale out and back in as rendering needs change. Other components that can simplify management of hybrid workloads, such as Azure Batch and Azure CycleCloud, are omitted here and will be discussed in future blog posts.
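As a rough illustration of the bursting decision, the sketch below estimates how many cloud instances a render farm might request when the queue exceeds on-premises capacity. It is only a sizing heuristic under stated assumptions and does not call any Azure API: the function name `burst_instances` and its parameters (`queued_frames`, `on_prem_slots`, `frames_per_instance`, `max_instances`) are hypothetical, and a real deployment would more likely rely on autoscale rules or a scheduler to make this decision.

```python
import math


def burst_instances(queued_frames, on_prem_slots, frames_per_instance, max_instances):
    """Estimate how many cloud instances to add for the overflow work.

    queued_frames:       frames currently waiting to render
    on_prem_slots:       frames the on-premises farm can take right now
    frames_per_instance: frames one cloud instance is expected to handle
    max_instances:       upper bound on cloud instances (cost guardrail)
    """
    if frames_per_instance <= 0:
        raise ValueError("frames_per_instance must be positive")
    # Only the work that does not fit on-premises needs to burst.
    overflow = max(0, queued_frames - on_prem_slots)
    needed = math.ceil(overflow / frames_per_instance)
    return min(needed, max_instances)


if __name__ == "__main__":
    # 100 queued frames, 40 fit on-premises, 8 frames per cloud instance.
    print(burst_instances(100, 40, 8, 20))
```

Capping the result at `max_instances` keeps a sudden spike in the queue from requesting more capacity than you have budgeted for.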

In conclusion, these architectural designs are well-suited for loosely coupled workloads and should be adapted based on specific requirements rather than being treated as a one-size-fits-all solution.